Rai on AI & Work: AI will augment work, not replace it, making data professionals more valuable than ever

Rai on the next breakthrough: The next leap will come from agents with memory and context to execute complex tasks

Rai on SaaS & AI: SaaS will shift from static tools and dashboards to truly AI-native platforms

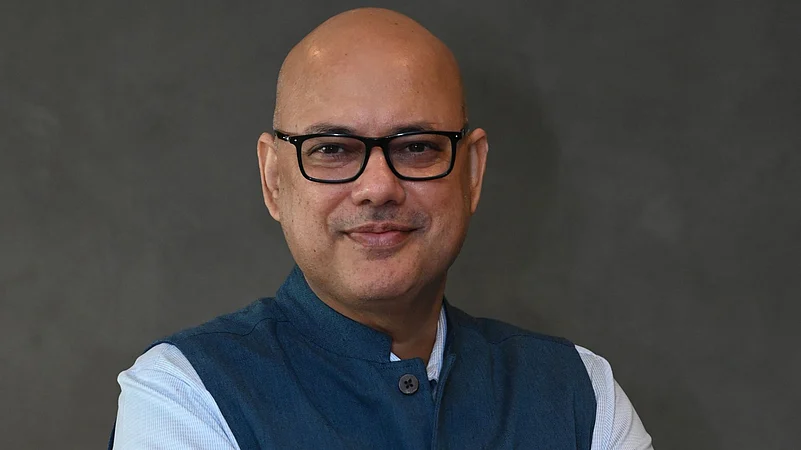

The era of simply throwing more compute and data at pre-training to build ever-larger foundation models is ending, says Vijayant Rai, India managing director of AI data cloud platform Snowflake. He explains that scaling laws show high-quality training data is running out, and that training extremely large models has become too slow, costly, and inefficient.

“Innovation is now shifting toward post-training techniques and specialisation,” says Rai.

In an interaction with Outlook Business, Rai shares his insights on how the AI business is changing, with the focus shifting from developing ever more sophisticated foundation models to smaller, more cost-efficient models suited to specific tasks.

Edited Excerpts

We often talk about India being an extremely price-sensitive market, and we’ve now seen OpenAI introduce a ₹399 plan as well. Are you considering market-specific pricing for India?

India is one of the fastest-growing developing economies in the world. We are seeing some amazing use cases in India, thanks to the scale at which the country operates and where it is today. So, from a market perspective, it is an absolutely strategic market for Snowflake, and we do whatever it takes to give our customers the best value proposition.

About a year ago, we created an INR pricing option, an Indian rupee regime in which our customers can transact. That’s a big one, because most American companies operate in US dollars, which means valuable foreign exchange flows out of the country. So, we’ve created an India entity to ensure that customers can transact in the local currency, under Indian jurisdiction.

The other interesting piece is that we operate on a consumption model, which means customers pay only for what they consume. The Snowflake AI Data Cloud is a fully managed data cloud available across major hyperscalers, and whatever customers consume, whether data, storage, or compute, is the only thing they are charged for.

Customers also don’t have to worry about the underlying charges that a hyperscaler like Azure or AWS would normally impose. Snowflake absorbs all of that in our pricing model.

There is a growing consensus that SaaS will be replaced by AI. How do you see the SaaS landscape being reshaped over the next few years?

AI isn’t replacing SaaS, it’s fundamentally reshaping it. Over the next few years, SaaS will evolve from static tools and dashboards to truly “AI-native” platforms: apps that embed AI agents and copilots to automate workflows, deliver proactive insights, and drive real business outcomes. Instead of simply providing software for users to operate, the next generation of SaaS will leverage data and AI to anticipate needs, make decisions, and even take action autonomously.

With so many foundation models in the market, do you expect consolidation? Where do you see horizontal model players winning, and where will vertical or domain-specific models gain an edge?

While we expect continued consolidation among foundation model providers, driven by massive compute and data costs, the AI landscape is also opening up. Horizontal models will power broad, general-purpose commodity use cases and platform integrations, where coverage and ease of use matter most. But the pace of innovation in open-source and vertical models is accelerating, as teams refine, customize, and deploy specialized solutions using domain-specific data. This democratization is breaking old monopolies and fueling distributed AI development, especially in high-stakes sectors where accuracy, compliance, and trust are paramount.

However, the real frontier for AI lies in agentic systems. In the coming year, innovation will focus on agentic AI: intelligent, integrated agents with robust context windows and persistent, human-like memory. These advances will help systems learn from past actions, operate autonomously over long horizons, and provide continuous, dynamic support, moving fundamentally beyond single interactions. The next major breakthroughs will be driven not just by bigger models, but by giving agents the memory and context they need to execute complex, enterprise-scale workflows.

Is the era of “big models only” ending?

The era of simply throwing more compute and data at pre-training to build ever-larger foundation models is ending. By 2025, established scaling laws, like the Chinchilla formula, had made it clear that we’re reaching the limits: high-quality pre-training data is increasingly scarce, and the token horizons required for effective training have become unmanageably long. As a result, the race to build the “biggest model” is slowing down.
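For readers curious about the arithmetic behind the Chinchilla reference, here is a rough sketch (our illustration, not Snowflake’s). It assumes two approximations: the widely cited rule of thumb derived from DeepMind’s 2022 Chinchilla paper, roughly 20 training tokens per model parameter for compute-optimal training, and the standard estimate that training compute is about 6 × parameters × tokens FLOPs. The function names and the constant are illustrative assumptions, not exact laws.

```python
# Rough Chinchilla-style arithmetic (illustrative sketch, approximate constants).
# Assumption: compute-optimal training uses ~20 tokens per parameter.
TOKENS_PER_PARAM = 20


def optimal_tokens(n_params: float) -> float:
    """Approximate compute-optimal number of training tokens for a model size."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params: float, n_tokens: float) -> float:
    """Common approximation of dense transformer training compute: C = 6 * N * D."""
    return 6 * n_params * n_tokens


if __name__ == "__main__":
    # Compare a 70B model with hypothetical 1T and 10T parameter models.
    for n in (70e9, 1e12, 10e12):
        d = optimal_tokens(n)
        print(f"{n:.0e} params -> {d:.1e} tokens, ~{training_flops(n, d):.1e} FLOPs")
```

For a 70-billion-parameter model, the sketch gives about 1.4 trillion tokens, which matches the configuration Chinchilla itself was trained with. At a trillion parameters, the same rule asks for roughly 20 trillion tokens, which illustrates why Rai sees high-quality data, rather than model size, as the binding constraint.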

Innovation is now shifting toward post-training techniques and specialization. Leading organizations are dedicating as much as 50% of their compute resources to refining, tuning, and adapting models, using methods like reinforcement learning and domain-specific datasets to dramatically improve task performance. In 2026, success won’t be defined by model size, but by how effectively a model can be specialized and operationalized for real-world needs.

Small and specialized models are competing, and often winning, in production because they’re more efficient, cost-effective, customizable, and easier to deploy at scale. They excel in use cases where latency, privacy, regulatory compliance, and precise outcomes are critical, often outperforming massive models on specific, well-defined tasks.

There’s a growing concern that AI will replace conventional jobs.

I believe AI will enhance work, not replace it. In fact, over the next five years, we’ll see AI making data professionals more valuable than ever. With agentic AI, repetitive tasks such as data cleaning, boilerplate feature engineering, or model tuning will be increasingly automated. This will free data scientists and analysts to focus on higher-value work: asking the right business questions, designing innovative solutions, and interpreting results with real business context. In other words, they’ll spend less time on the “how” and more time on the “why” and “what if”.

AI models are only as good as the data powering them, making data every company’s most valuable asset. There are two major trends that will help organizations transform data engineering into the discipline that unlocks this value. The first is that data engineers need to look holistically at what data is required, and how, to surface critical insights. The second is that, to succeed, enterprises need real-time access to high-quality data, which puts data engineers in a more strategic role. Their insights are increasingly shaping business decisions, making it essential for them to understand the broader business context and the needs of their customers. The organizations that will thrive are those that treat data engineers as key business partners, integrating their expertise to ensure data drives success.

Many AI leaders say the industry is shifting toward outcome-based pricing. From your vantage point, what are clients actually asking for?

Just to clarify Snowflake’s positioning, we are an enterprise-focused company. We largely work with enterprise organisations and digital-native companies, not so much on the consumer side. So, when you look at products like ChatGPT or ChatGPT Go, their focus is typically on consumers. In our case, for enterprises to get value, we create different pricing models that suit their needs.

Outcomes have always been part of enterprise decision-making. Even in the past, enterprises would look at the outcome they expected and evaluate whether they would get ROI on the investment made for that outcome. Outcomes vary: in financial services it could be customer experience or risk management; in other industries, it could be something entirely different depending on what they aim to achieve.

Because we want our customers to realize value from the Snowflake AI Data Cloud, we try to align with whatever outcome they are aiming for. For example, if you’re an airline looking to increase passenger load and you’re using data and AI for analytics or other applications, we make sure that the pricing model we propose is closely tied to the outcome you’re trying to achieve and is positioned to deliver value.

In my view, outcome-based pricing is a good approach. It helps vendors like us ensure that customers get a clear ROI, and when customers see that value, they naturally end up consuming more, which benefits both sides.

How much of your total revenue currently comes from India? And could you talk a bit about your R&D presence in India? You had earlier mentioned plans to set up an R&D centre.

We already have one in Pune. Specific to India, we don’t provide country-level numbers since we are a listed company. But India is one of the fastest-growing markets for Snowflake globally in terms of year-on-year growth.

We doubled our go-to-market (GTM) team in the past year. We also have a large Centre of Excellence in Pune supporting global customers, though I don’t have specific updates on R&D expansion yet.

How do you track AI revenue?

We look at AI essentially in terms of the number of messages, which you can also call tokens. It’s very easy to track usage on the platform whenever someone uses AI.

Today, AI usage works like this: your data sits on the Snowflake AI Data Cloud, and different LLMs, including OpenAI, Anthropic, Meta, Gemini, and others, are available directly on the platform. Unlike consumers, enterprises have a crucial requirement: their data must remain safe. The data they use for inferencing must also be secure so that they aren’t liable later.

Our design philosophy keeps this front and center. All your data stays within the Snowflake platform. We bring the LLMs to the data.

We offer customers all the latest LLMs, with interfaces they can use easily. If a use case calls for Anthropic’s models, they can use those. Billing is seamless; customers don’t have to worry about it, because usage flows through compute.

You launched Arctic, your own language model, earlier this year. This comes at a time when you also have integrations with multiple open language models. What made you launch Arctic?

Arctic is an enterprise-grade LLM designed specifically for enterprise use cases. It complements models from Anthropic and OpenAI. Customers now have multiple choices depending on the use case: coding, analysis, summarisation, and so on.

What are Snowflake’s plans for India in 2026?

We will continue to scale our GTM organisation, expand our partner ecosystem, support more ISVs building on Snowflake, and heavily invest in skilling. India has one of the world’s largest developer ecosystems, so we aim to skill 100,000 professionals through NASSCOM and ICT Academy in the next few years.