OpenAI is hiring Head of Preparedness with salary of $555,000 plus equity

Role is response to AI challenges: mental distress & security vulnerabilities

Leader will manage risks in cybersecurity, biology & autonomous replication

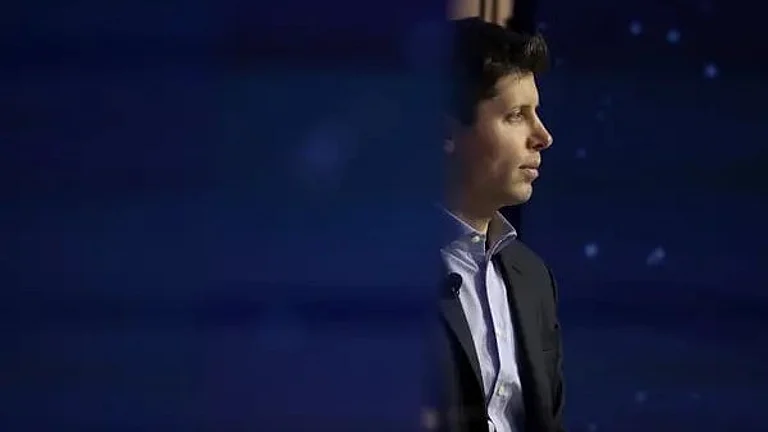

OpenAI on Tuesday announced a major hiring push for a new senior position, the Head of Preparedness, offering cash compensation of $555,000, plus equity. The role, unveiled by CEO Sam Altman, is described as mission-critical as model capabilities accelerate and new forms of potential harm emerge.

The selected candidate will be tasked with building and operating OpenAI’s preparedness framework from end to end. This includes designing capability evaluations, developing threat models across areas such as cybersecurity and biology, and coordinating mitigation measures that feed into product launch decisions and broader safety policy. Altman said the role will require strong technical judgment, close cross-functional collaboration, and the ability to translate evaluation outcomes into practical operational safeguards.

Role Details

Altman framed the hire as a response to what he described as “real challenges” the company has observed this year, pointing to early signs that AI is affecting mental health and becoming powerful enough to expose serious security vulnerabilities. He warned that the role would be high pressure, saying the person would be thrown into complex problems almost immediately. He also emphasised that preparedness work must strike a balance between preventing misuse and preserving the beneficial applications of AI.

The new Head of Preparedness will lead a small, high-impact unit within OpenAI’s Safety Systems organisation, which already builds evaluations, safeguards and safety frameworks for frontier models. The team’s mandate includes developing rigorous and scalable assessments of model capabilities, establishing threat models, designing mitigation measures and ensuring that these findings directly inform governance decisions as well as product and policy choices.

Deliverables

The role will involve overseeing precise and repeatable capability evaluations, steering the design of mitigations for major risk areas such as cybersecurity and biological misuse, and ensuring that preparedness insights are embedded into model launches, post-deployment monitoring and enforcement. It will also require close collaboration across research, engineering, product and policy teams, as well as with external partners, to ensure safeguards function effectively in real-world settings.

OpenAI’s hiring push comes amid heightened scrutiny of the real-world impacts of advanced chatbots. Company audits and reporting earlier this year highlighted hundreds of thousands of users showing signs of severe mental-health distress in interactions with AI systems, while regulators and security experts have warned of rising AI-enabled risks, including sophisticated phishing and vulnerability discovery. These developments have prompted leading AI firms to step up investments in risk-reduction programmes and preparedness teams.