In late January this year, Swifties were in for a shock: pornographic images of their icon, Taylor Swift, had flooded social media platforms. Fans were quick to react and forced the platforms to take the images down, but not before some 47 million people around the world had seen them.

Taylor Swift is not alone. In February, pornographic images of popular American podcaster and influencer Bobbi Althoff were uploaded to X (formerly Twitter) and garnered over 4.5 million views in just nine hours.

The problem is not just the intrusion into privacy: these images are fakes, generated using artificial intelligence (AI), and are known as “deepfakes”. Celebrities are the most-viewed group among the victims. Scarlett Johansson, Kristen Bell, Nora Fatehi, Rashmika Mandanna, Samantha Ruth Prabhu, Shraddha Kapoor, Tom Hanks and Sachin Tendulkar are among the celebrities whose deepfake videos or photos have circulated online. The men's deepfakes are used to promote various products and schemes; the women's are pornographic.

Disturbing Data

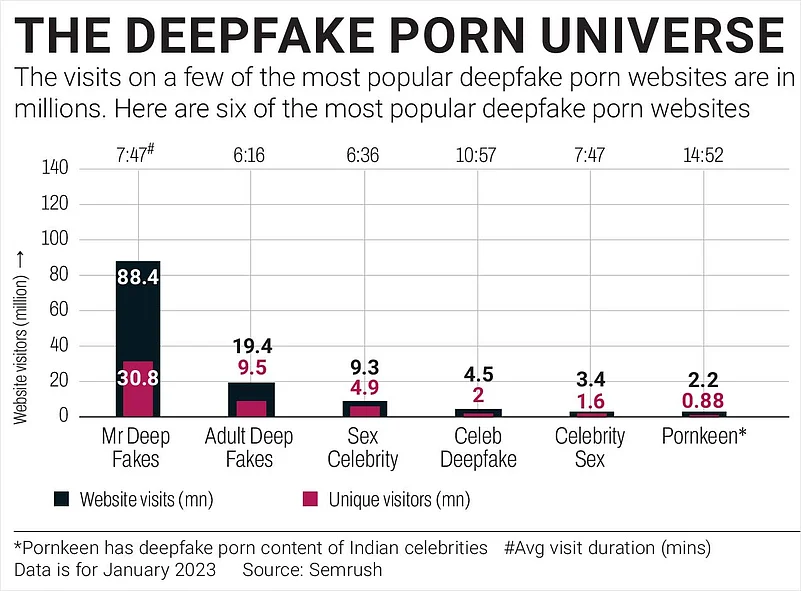

The numbers are a cause for worry. MrDeepFakes, a website, had 88.4 million visits in January alone, according to Semrush, a US-based online traffic analytics service. The number of synthetic adult videos increased 24-fold between 2019 and 2023. Last year, 143,868 new deepfake videos were uploaded online, according to Genevieve Oh, a South Korea-based independent data analyst for live-streaming platforms. “More deepfake videos have been posted online in 2023 than every other year combined,” she says. She adds that there are over 40 dedicated websites and 61 “nudifier” apps (apps that edit photos to show the subject without clothes), the majority of which sprang up in 2023.

One worrying trend of recent years is the proliferation of websites dedicated exclusively to celebrity porn videos. MrDeepFakes is the most viewed of these. It features celebrities' faces grafted onto the bodies of porn performers. Anyone can post a paid request for a celebrity deepfake in the website's forum section.

Another website, AdultDeepFakes, features actresses, YouTubers, television personalities and other celebrities. In January, the website had 19.4 million visits from 9.5 million unique visitors.

A report by the Centre for International Governance Innovation, a think tank, says that 96% of deepfake images are pornographic in nature, and 99% of those target women. In a study titled 2023 State of Deepfakes, Home Security Heroes, a team of online experts, found that 94% of the individuals featured in deepfake pornographic videos are affiliated with the entertainment sector.

Difficult to Detect

Face-swapping is not a new phenomenon; it was earlier done using Photoshop and similar software. What makes the current technology far more dangerous is that the real and the fake are so alike that the difference is imperceptible to the human eye. “The major concern around deepfake is the way it makes use of images of real people to generate either a hyper-realistic image or superimpose a real face on to another body,” says Darshana Sreedhar Mini, assistant professor at the University of Wisconsin-Madison, who has worked on the subject of pornography.

Deepfake technology has fuelled a rise in revenge porn videos. Perpetrators use face-swapping websites or apps that rely on machine-learning algorithms and neural networks. Photos or videos of the victims, taken from social media to capture their voice, facial expressions and body movements, are uploaded to one of these AI-based tools.

The resulting images are then used to blackmail the target. According to experts, ransom demands range from Rs 5 lakh to Rs 10 lakh. If victims do not give in, the images are usually circulated on social media.

Perpetrators can hide their identity using secure virtual private network (VPN) connections. “To hide their IP [internet protocol] address, they use a VPN account. It is nearly impossible to track these criminals,” says Ummed Meel, a cyber security professional.

Payments, he says, are received through digital wallets and in cryptocurrencies.

Made in India

While India does not have a dedicated porn industry, the country is not far behind when it comes to producing and consuming adult deepfake videos. “We do not have a full-fledged porn industry in India, except amateur porn as well as videos uploaded through aggregators,” says Mini of the University of Wisconsin-Madison.

Although exact numbers are hard to come by, dedicated deepfake porn sites based in India do exist. Pornkeen, which features Bollywood actresses, drew 22 lakh visitors in January. Another, Bollyxxx, had 4.84 lakh visitors in the same month.

MrDeepFakes has a forum where users post requests, and deals are often finalised on Telegram. An Indian creator on the website, who goes by the username @xkingmr, charges Rs 500–650 for a celebrity video already in his stock. When asked for a deepfake porn video of a celebrity he had not yet made one of, he demanded Rs 1,200–1,500.

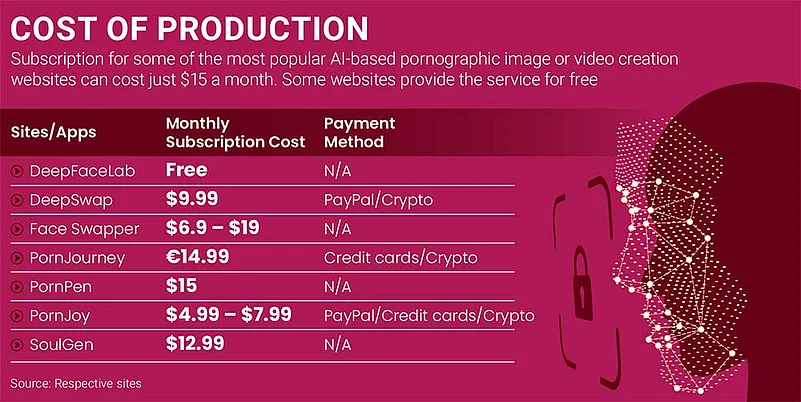

Unstable Diffusion, a community that creates AI-generated porn and runs a subreddit and a server, earns $25,000 a month from several hundred donors through the server’s Patreon account, according to technology news website TechCrunch. Many porn-generating platforms sell subscriptions through anonymous Discord or Patreon accounts. Subscription plans on these deepfake image- or video-generation websites go up to $15 a month.

Most of the income on MrDeepFakes is concentrated in the hands of its most active career deepfakers. “Within six months, one of the leading creators earned $74,000 from 75 subscribers,” Oh says.

According to her, India is one of the fastest-growing sources of traffic. “Out of the total traffic on the top 40 dedicated deepfake-hosting websites, India represents 14.5% of the monthly traffic; Indian-targeting content [on MrDeepFakes] got over 33 crore views [between February 2018 and February 2024], representing 18.5% of the content,” she says.

Deepfakes have become a way to make quick money. As a result, numerous video tutorials and step-by-step guides on how to use these apps have emerged, and they also recommend and rate the available apps. The ease of creation is evident in the Home Security Heroes report, which says that a 60-second deepfake pornographic video can be produced for free in less than 25 minutes.

Handy Tools

The first pornographic deepfakes appeared in 2017 on the social networking platform Reddit. Drawing on the large number of images available on the internet, a user uploaded fake pornographic videos of celebrities. Gal Gadot, Emma Watson, Katy Perry, Taylor Swift and Scarlett Johansson were among the early targets. This gave rise to a dedicated subreddit, r/deepfakes, where thousands of users shared AI-manipulated videos and adult content. Reddit later banned the subreddit.

Reddit remains a platform where such content, and the technology behind it, is shared for others to use. Although r/deepfakes is banned, numerous AI-generated porn images and videos are available on the platform. And although Reddit has a “safe search” option to hide mature content, a search for “AI porn” returns more than a hundred communities facilitating the creation and distribution of AI-based pornographic images and videos. The subreddit r/unstable_diffusion alone has over one lakh members.

The messaging app Telegram also hosted such a service in the form of a bot. An October 2020 report by Sensity AI, a cybersecurity firm, says that the bot allowed users to create realistic nude images of women. Users simply submitted a picture of the target, and the bot returned a “stripped” version of it. Sensity found that stripped personal pictures of at least 104,852 women had been shared publicly.

According to an article in Wired magazine, in the same month the bot sent galleries of such images to an associated Telegram channel with almost 25,000 subscribers. Another channel, used to promote the bot, had over 50,000 subscribers.

GitHub, Microsoft’s cloud-based software development platform, has also been a source of tools for this business. DeepFaceLab, a deepfake-generating app, is hosted on the platform. Multiple versions of the DeepNude code, which powered the Telegram bot that undressed women, were available on the platform in 2020 before being removed.

Putting Up Guard Rails

Social media platforms have started to put measures in place. X has restrictions on adult content and allows users to filter out sensitive content through its settings. Instagram allows users to report inappropriate content. Telegram allows users to report and block channels that contain pornographic content.

Despite these measures, the share of AI-generated and celebrity deepfakes in the porn industry is growing, and non-consensual pornographic content remains prevalent on these platforms. Meel, the cyber security professional, says, “They cannot block the content upfront until they receive a complaint. It is partially controlled and manageable; it cannot be controlled 100% on social media.”

“Given the size of the platforms and their user base, I think there is a pressing need that their response time is better, the reporting options are more simplified and are easily accessible for not just people who are working in this domain but also for the public at large,” says Raj Pagariya, partner, technology law, at The Cyber Blog India.

In India, legal provisions such as Section 66E of the Information Technology Act, 2000 can be used to safeguard privacy. Other laws address obscenity, pornography-related offences, harm to reputation and the spread of false information. Violators of Section 66E can face imprisonment for up to three years, a fine of up to Rs 2 lakh, or both. However, Pagariya points to a loophole. “This provision talks about recording without consent but in deepfake, the content is generated. This is the one major difference that is preventing [Section] 66E from being applicable in cases like these,” he says.

“Various other sections including Section 67 [dealing with transmission of obscene material], Section 67A [addressing sexually explicit material transmission] and Section 67B [related to child pornography] provide legal grounds for prosecuting such offences,” says Sanhita Chauriha, project fellow at the Vidhi Centre for Legal Policy, Delhi.

Still Not Enough

The Information Technology (IT) Rules, 2021 set out the grounds on which content can be removed from a platform. Rule 3(1)(b)(ii) requires intermediaries to exercise due diligence with respect to content that is obscene, pornographic, paedophilic, invasive of another’s privacy, racially or ethnically objectionable, or that falls into other such categories. But not every platform complies.

“The rules mention that if the platform is not complying and not replying within 30 days, then one can report to Grievance Appellate Committees. If you key in a name [on the website] that is not recognised as an intermediary by the committee, there is a very good chance that they will not even admit your appeal,” says Pagariya.

Section 8(5) of the Digital Personal Data Protection Act, 2023 obliges the data fiduciary (an entity that determines the purpose and means of processing personal data) to take reasonable security safeguards to protect all personal data in its possession from breaches.

The proliferation of deepfake pornography makes it evident that the existing provisions are not enough. The problem demands urgent attention if individuals, especially women, are to be protected from harm.