Alibaba launched RynnBrain, an open-source AI model designed for physical robotics tasks

Alibaba claims superior benchmark results against Google’s Gemini Robotics and Nvidia’s Cosmos

Three specialised variants (Plan, Nav, and CoP) target manipulation, navigation, and spatial reasoning

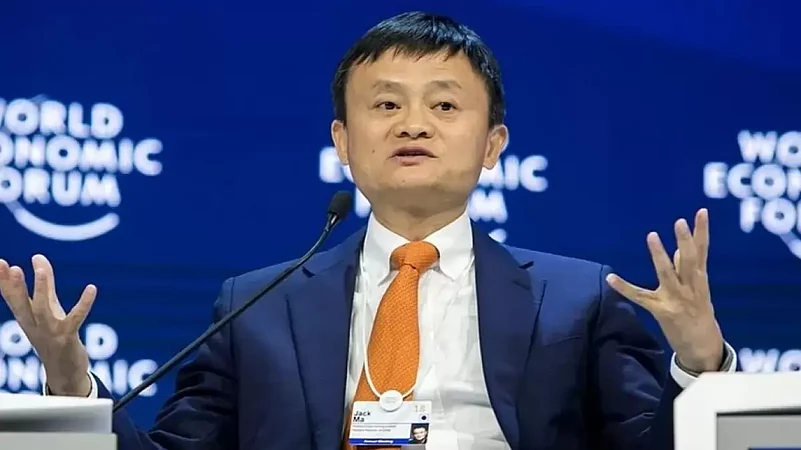

Chinese conglomerate Alibaba Group on Monday released an AI model called RynnBrain that is designed to help robots and other devices perform real-world tasks, Bloomberg reported.

According to the model’s description, RynnBrain can assist with object mapping, trajectory prediction, and navigation in complex, cluttered environments such as kitchens or factory assembly lines.

The open-source foundation model was developed by Alibaba’s research arm, DAMO Academy (Discovery, Adventure, Momentum, and Outlook). It is reportedly built to actively engage with its surroundings, understand how space changes over time, and determine the sequence of steps needed to complete a task.

What is RynnBrain?

RynnBrain is designed to ground its intelligence in the physical world using egocentric perception, precise spatiotemporal understanding, and real-world task planning.

This reportedly allows the model to reason in a physics-aware manner and execute complex, embodied tasks. Alibaba has released the model in dense versions with 2 billion and 8 billion parameters, as well as a larger 30-billion-parameter mixture-of-experts (MoE) version. In addition, the company has introduced three specialised variants: RynnBrain-Plan for manipulation planning, RynnBrain-Nav for navigation, and RynnBrain-CoP for spatial reasoning.

The model is said to demonstrate strong egocentric understanding and can handle fine-grained video analysis tasks such as embodied question answering, object counting, and optical character recognition.

It also offers advanced spatiotemporal localisation, enabling accurate identification of objects, target areas, and motion paths using episodic memory. By combining textual and spatial grounding, RynnBrain anchors its reasoning in physical space, while its physics-aware planning integrates object properties and affordances to help vision-language-action models carry out complex tasks.

Model Details

RynnBrain is built on Alibaba’s Qwen3-VL vision-language model and is available on platforms such as Hugging Face and GitHub. It comes in multiple versions, ranging from a compact 2-billion-parameter dense model to a 30-billion-parameter mixture-of-experts variant.

The model is positioned for use in a strategic area where China and the US are competing for leadership. China has identified robotics, including humanoid robots, as a core focus of its plans to dominate physical AI and transform industries such as manufacturing and hospitality.

Global AI Race

With the launch of RynnBrain, Alibaba has stepped up its competition in the global AI race with companies such as Google and Nvidia.

The company claimed state-of-the-art performance on key benchmarks when compared with Google’s Gemini Robotics-ER 1.5 and Nvidia’s Cosmos-Reason2.

Chinese firms have largely embraced open-source AI, in contrast to the US approach of keeping advanced technologies proprietary.

This open-source push in physical AI, previously driven mainly by academic institutions such as Stanford University and the University of California, Berkeley, could enable researchers worldwide to improve the technology and potentially narrow the West’s lead in the field.