Nvidia reacted to Google's TPU push, stating it applauds competition

Nvidia argued its chips offer broader performance and flexibility.

Reports say Google plans to allow on-premise TPU installation and that Meta is considering a large deployment

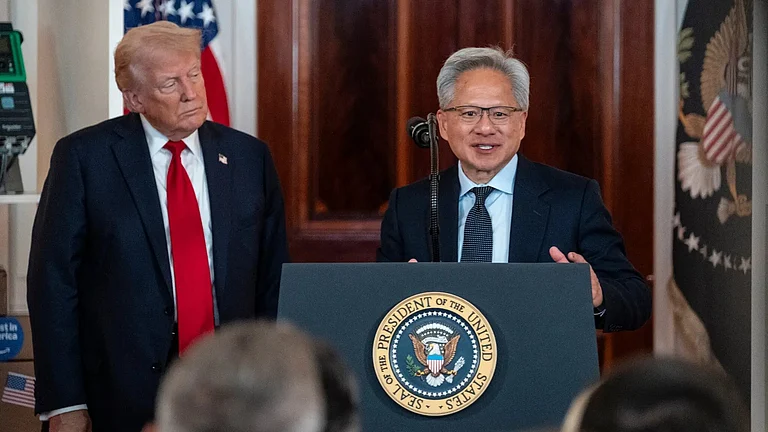

Nvidia on Tuesday reacted to reports that Google is pitching its tensor processing units (TPUs) more broadly, saying it applauds competition while underscoring that its GPU platform remains the industry’s leading, more versatile option.

In a post on X, Nvidia said it was delighted by Google’s success and reiterated that it continues to supply chips to Google. The company argued that its GPUs offer broader performance and flexibility than application-specific chips, adding that its platform, supported by a mature software stack, runs every AI model wherever computing is done.

“We’re delighted by Google’s success — they’ve made great advances in AI and we continue to supply to Google. NVIDIA is a generation ahead of the industry — it’s the only platform that runs every AI model and does it everywhere computing is done,” Nvidia said.

The message was aimed at reassuring customers and investors after market chatter that Google could take share from Nvidia by selling or deploying TPUs more widely.

Market Share, Performance & Supply

Several reports claimed that Google plans to let customers install TPUs in their own data centres and that Meta is exploring both renting TPU capacity and committing to large-scale on-prem deployments starting in 2027.

That prospect rattled markets because Nvidia currently dominates AI accelerators and its GPUs power the vast majority of training and inference workloads. Nvidia framed the contest as one of platform breadth and software ecosystem rather than a pure hardware race.

Technical & Commercial Gaps

Nvidia’s defence relies on two practical advantages: its chips run a wide range of models and frameworks, and its developer tools (notably CUDA) are highly entrenched.

TPUs are ASICs tailored to tensor operations and can be cost-effective for specific workloads, but customers weigh several trade-offs (performance per dollar, software portability and the effort of changing infrastructure) before shifting away from GPUs. Market observers also note that foundry capacity (notably at TSMC) constrains how fast any new silicon can scale into production.

Diversification versus Incumbent Strength

Analysts say hyperscalers and large enterprises want supplier diversity to reduce pricing pressure and geopolitical supply risks. Google pitching TPUs for on-prem use addresses customers that prioritise data control or latency, and a marquee buyer such as Meta would validate the approach. Still, Nvidia’s broad software ecosystem, existing installed base and the flexibility of GPUs make it hard to displace quickly.

Shifting the market will be hard in practice: on-prem deployments require validated software stacks, integration support and long procurement cycles. Even with promising hardware, new entrants must solve manufacturing scale, tooling and developer adoption before challenging Nvidia’s lead at enterprise scale.