AI-accelerator market may reach $475B by 2030; Nvidia's share could fall to ~67%

Broadcom projected to gain substantial share (~14%); AMD around 4%

XPU trend: heterogeneous processors (CPU+GPU+ASIC+FPGA) offering specialised, efficient compute

Shift driven by demand for lower costs, vendor diversity and specialised AI infrastructure

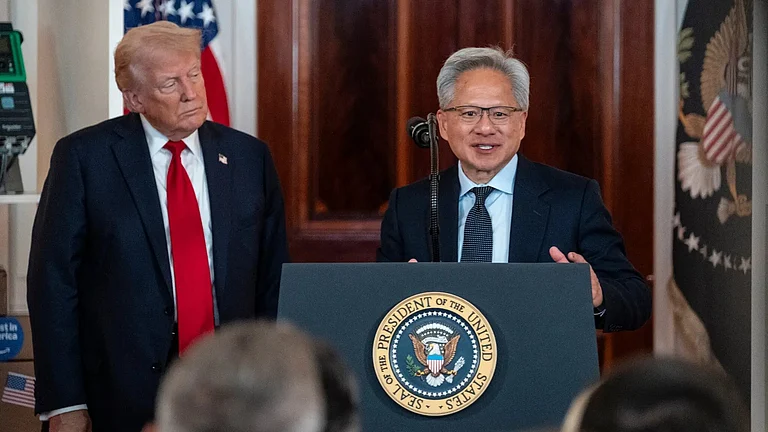

Nvidia, the world's most valuable company, reportedly faces a threat to its dominance of the AI chip market as competitors roll out more powerful, customisable systems.

A Barron’s report, citing Susquehanna analyst Christopher Rolland, says the AI-accelerator market could be worth $475 billion by 2030. Nvidia, which currently holds around 80% of that market, is forecast to see its share fall to about 67% by 2030 as customers look for cheaper alternatives and a more diverse set of suppliers.

Under Rolland’s projections, Broadcom would become the second-largest player, with around 14% of the market (≈ $65 billion), while AMD would hold just over 4% (≈ $20 billion). The Barron's piece summarises Rolland’s revenue and market-share projections along with his price targets for the stocks mentioned.
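The projections can be sanity-checked with simple arithmetic. The sketch below multiplies each quoted share by the $475 billion market size; the helper names are illustrative, and the results are unrounded products, so they land close to (not exactly on) the rounded dollar figures in the report. It also shows that the three named vendors account for about 85% of the forecast market, leaving roughly 15% for everyone else.

```python
# Share and market-size figures as quoted from the Susquehanna forecast.
MARKET_2030_USD_BN = 475.0

PROJECTED_SHARE_2030 = {
    "Nvidia": 0.67,
    "Broadcom": 0.14,
    "AMD": 0.04,
}


def implied_revenue(share: float, market: float = MARKET_2030_USD_BN) -> float:
    """Revenue in $bn implied by a fractional market share."""
    return share * market


def remaining_share(shares: dict) -> float:
    """Share left over for vendors not named in the forecast."""
    return 1.0 - sum(shares.values())


for vendor, share in PROJECTED_SHARE_2030.items():
    print(f"{vendor:9s} {share:5.0%}  ~${implied_revenue(share):.1f}bn")
print(f"Others    {remaining_share(PROJECTED_SHARE_2030):5.0%}")
```

For Broadcom, 14% of $475 billion is about $66.5 billion, consistent with the ≈ $65 billion quoted for a share of "around 14%".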

On its recent earnings call, Broadcom announced that a large customer had placed orders worth $10 billion for custom AI chips, which the company calls XPUs.

XPUs: Alternative to GPUs?

A recent HPCwire report stated that the AI revolution has triggered huge demand for computing infrastructure capable of training the largest foundation models. Nvidia still dominates the high-end AI accelerator market with its enterprise GPUs, but the company, with a market capitalisation of $4 trillion, has reportedly been unable to keep up with surging demand.

Intel addressed this issue in a 2021 blog post, stating: “There is no longer a one size fits all architecture for the diversity of today’s data. Specialised architectures have shown that they can optimise performance, power, or latency as needed, but getting the right balance for a gaming GPU is vastly different than what is needed for a battery-operated security camera.”

In short, Intel argued that different use cases are served by separate processors, and that no single processor or consolidated system can handle all major use cases on its own. The ability to build a broad set of architectures targeting an even broader set of workloads is therefore the essence of Intel’s XPU strategy.

An XPU is not a single product but a category of processors or architectures capable of handling multiple types of workloads, including AI, graphics, HPC, analytics, and traditional tasks, through a unified platform.

The term was popularised by Intel when it started building a portfolio beyond CPUs and GPUs. The chip giant positioned “XPU” as a family of architectures (CPU + GPU + FPGA + AI ASICs) under one umbrella.

The “X” in XPU is often interpreted as a placeholder for “any” or “cross-domain,” signifying flexibility and extensibility. It isn’t one single chip type, but rather a concept and direction in processor architecture where heterogeneous computing resources are unified or designed to work together.

Tech Giants Strive for XPUs

Examples of XPUs in action can be seen across several leading technology companies. Intel’s XPU vision brings together Xeon CPUs, Xe GPUs, FPGAs and AI accelerators under a unified stack to handle diverse workloads seamlessly.

Nvidia’s Grace Hopper Superchip combines a CPU (Grace) with a GPU (Hopper) in a tightly coupled heterogeneous processor, representing a strong XPU-like design.

Apple’s M-series chips follow the same approach, integrating the CPU, GPU, and Neural Engine on a single system-on-chip and effectively functioning as an XPU for consumer devices. Even earlier, AMD’s Accelerated Processing Units (APUs) were an early attempt at CPU and GPU integration, laying the groundwork for today’s XPU designs.

Chronology of Compute

CPUs started as general-purpose engines optimised for flexibility and single-thread performance, but they are not energy-efficient for massively parallel tasks. GPUs initially emerged to handle graphics but were later adapted to general-purpose parallel math, and they now offer much higher throughput for data-parallel workloads such as deep learning.

The CPU is the general-purpose brain of a computer: optimised for a wide range of tasks, it is good at serial, branch-heavy code, operating-system services and control logic. GPUs, by contrast, are massively parallel processors built to run thousands of simple, similar operations at once (originally to render pixels).

That parallelism makes them excellent for dense linear algebra and data-parallel workloads like neural-network training, simulations, and video processing, where throughput and memory bandwidth matter more than single-thread latency.
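The difference between data-parallel and serial work can be sketched in a few lines. In the example below (function names are illustrative), `scale_and_add` computes each output element independently, so the input can be split into chunks handled by separate workers; `serial_recurrence` carries a dependency from one step to the next, so it cannot be split that way. Python threads are used only to show the structure of the split: because of the interpreter lock they do not deliver GPU-style speedups for this kind of arithmetic.

```python
from concurrent.futures import ThreadPoolExecutor


def scale_and_add(chunk, a=2.0, b=1.0):
    """Data-parallel kernel: y_i = a*x_i + b, each element computed independently."""
    return [a * x + b for x in chunk]


def data_parallel(xs, workers=4):
    """Split the input into chunks and process them concurrently.

    Because no element depends on another, chunks can be handled by any
    worker in any order, the property GPUs exploit at massive scale.
    """
    size = max(1, -(-len(xs) // workers))  # ceiling division
    chunks = [xs[i:i + size] for i in range(0, len(xs), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        parts = pool.map(scale_and_add, chunks)  # results come back in input order
    return [y for part in parts for y in part]


def serial_recurrence(xs, decay=0.5):
    """Serial kernel: s_i = decay*s_(i-1) + x_i; step i needs the result of step i-1."""
    s, out = 0.0, []
    for x in xs:
        s = decay * s + x
        out.append(s)
    return out


print(data_parallel([1.0, 2.0, 3.0, 4.0]))
print(serial_recurrence([1.0, 1.0, 1.0]))
```

The first function scales naturally to thousands of lanes; the second is the kind of branch- and dependency-heavy code where a CPU's single-thread performance matters more.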

As ML and other specialised workloads grew, domain-specific accelerators (TPUs, NPUs) and reconfigurable hardware (FPGAs) delivered huge efficiency gains for particular tasks, but they created silos with different toolchains and memory models.

NPUs (neural processing units) and TPUs (tensor processing units, Google's family of ML accelerators) are domain-specific accelerators designed for machine-learning math such as matrix multiplications and convolutions. They are tuned for high efficiency and low power on inference and/or training workloads, delivering much higher performance per watt for neural networks than CPUs and often outperforming general-purpose GPUs on certain ML tasks.
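Much of a neural network's work reduces to matrix multiplication, and multiplying an M × K matrix by a K × N matrix takes M·N·K multiply-accumulate (MAC) operations. The naive sketch below counts them explicitly; it illustrates the workload only and says nothing about how any particular NPU or TPU is built internally (Google's TPUs, for example, use a systolic-array matrix unit to perform many MACs in parallel).

```python
def matmul(a, b):
    """Naive dense matrix multiply of an M x K matrix by a K x N matrix.

    Returns the product and the number of multiply-accumulate (MAC) operations.
    """
    m, k = len(a), len(a[0])
    if len(b) != k:
        raise ValueError("inner dimensions must match")
    n = len(b[0])
    out = [[0.0] * n for _ in range(m)]
    macs = 0
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply-accumulate
                macs += 1
            out[i][j] = acc
    return out, macs


product, macs = matmul([[1.0, 2.0], [3.0, 4.0]], [[5.0, 6.0], [7.0, 8.0]])
print(product, macs)
```

For two square 4096 × 4096 matrices the same formula gives 4096³ ≈ 6.9 × 10¹⁰ MACs per product, which is why dedicated accelerators focus silicon on this one operation.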

FPGAs (field-programmable gate arrays) are reconfigurable hardware: arrays of logic blocks that can be programmed to implement custom circuits. In terms of flexibility they sit between fixed-function ASICs and software. For fixed workloads they are far more energy-efficient than software running on a CPU, yet they remain more adaptable than fixed silicon, which makes them useful for specialised processing, low-latency networking and prototyping hardware accelerators.

The goal of the XPU approach is to match each workload to the most efficient engine while minimising data movement and developer friction through shared memory, cache coherency and portable programming layers.
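The trade-off between compute efficiency and data movement can be illustrated with a toy dispatcher. This is a minimal sketch under loudly stated assumptions: the `Engine` class, `pick_engine` function and every efficiency and transfer-cost number are hypothetical, invented only to show the idea, and do not describe any vendor's scheduler or real hardware figures.

```python
from dataclasses import dataclass, field


@dataclass
class Engine:
    """A compute engine in a hypothetical heterogeneous (XPU-style) system."""
    name: str
    # Hypothetical efficiency per workload kind, in giga-operations per joule.
    gigaops_per_joule: dict = field(default_factory=dict)
    # Hypothetical energy cost of moving 1 GB of input data to this engine.
    transfer_joules_per_gb: float = 0.0


def pick_engine(engines, kind, gigaops, data_gb):
    """Return (engine name, estimated joules) with the lowest compute + transfer cost."""
    best = None
    for engine in engines:
        efficiency = engine.gigaops_per_joule.get(kind)
        if efficiency is None:
            continue  # this engine cannot run this kind of workload
        cost = gigaops / efficiency + data_gb * engine.transfer_joules_per_gb
        if best is None or cost < best[1]:
            best = (engine.name, cost)
    if best is None:
        raise ValueError(f"no engine supports workload kind {kind!r}")
    return best


# Made-up numbers chosen only to illustrate the trade-off.
ENGINES = [
    Engine("CPU", {"control": 1.0, "matmul": 0.5}, transfer_joules_per_gb=0.0),
    Engine("GPU", {"matmul": 20.0}, transfer_joules_per_gb=2.0),
    Engine("NPU", {"matmul": 50.0}, transfer_joules_per_gb=5.0),
]

print(pick_engine(ENGINES, "matmul", gigaops=1000, data_gb=1))
print(pick_engine(ENGINES, "matmul", gigaops=10, data_gb=10))
print(pick_engine(ENGINES, "control", gigaops=5, data_gb=0.1))
```

With these illustrative numbers a large matrix multiply is routed to the NPU, while a small one with a lot of input data stays on the CPU because moving the data would cost more energy than the accelerator saves. Shared memory and coherency aim to shrink exactly that transfer term.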