Are you being watched?

Yes.

This is not the beginning of a psycho-thriller in which you get stabbed in the end. This is a story that ends more benignly, say, with you buying a soap or a protein-rich biscuit. In this story, the one observing you is not a crazed human hiding behind a potted plant but a well-lit retail store with an open floor plan and camera-eyes. This store is ‘teched-up’ to read your mind: it can sense that a lipstick shade made you sad, that a toor dal brand surprised you with a discount rate or that a chocolate bar made you feel happy. It can then ‘evolve’ into a version that you will love, by not stocking that lipstick, by encouraging the consumer brand to offer more discounts and by stocking shelves in your line of vision with that chocolate bar.

This is an emotionally intelligent store. It is one of a growing tribe of spaces and inanimate objects functioning with emotion AI or artificial emotional intelligence. According to Gartner, 10% of our personal devices will have emotion AI by 2022. Soon, your fitness band could detect you are depressed and get your speaker to play soothing whale sounds.

It has a past

Retail has always strived to be emotionally intelligent. Earlier, the effort was manual. So you had people walking around with survey forms, pencil or pen tucked behind their ears, politely enquiring about the shopping experience. But, over the past three to four years, Indian retail has discovered that it doesn’t need this survey staff. It can automate the whole process. “Companies can collect the data at a much larger scale, at an economical price and test everything quicker,” says Arush Kakkar, founder, Agrex.ai. All they needed was emotionally intelligent bots.

“Emotion AI has the power to transform consumer connect across the world. Using this tech, brands and retailers can understand the consumer’s state of mind and can modulate their offer or product or service according to it,” says Saloni Nangia, president, Technopak Advisors, a leading retail consulting firm.

Kakkar’s Agrex.ai, founded in 2018, makes “physical spaces smart using video analytics”. The start-up helps retail spaces use their cameras to count their customers, to profile them by age, gender and so on, and to classify their emotions. For example, emotionally intelligent cameras can check if you have raised your cheek and pulled up a corner of your lip, and guess that you are happy. Or they could check if you have lowered your brow, raised and tightened your upper eyelids and tightened your lips, and guess that you are angry. Or they could add more parameters, such as the tilt of your head or the size of your pupils, to facial coordinates and make a closer guess. Agrex.ai’s client list includes Bata and Marks & Spencer.
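
The cue-to-emotion guesses described above can be sketched as a pair of simple rules. This is only an illustration in Python, not Agrex.ai’s actual method: the `FaceSignals` fields and the `guess_emotion` function are hypothetical names, and a real system would presumably work from model-estimated scores rather than yes/no flags.

```python
from dataclasses import dataclass


@dataclass
class FaceSignals:
    """Hypothetical per-frame cues from a face-analysis model (True = cue seen)."""
    cheek_raised: bool = False
    lip_corner_pulled: bool = False
    brow_lowered: bool = False
    upper_lids_raised: bool = False
    upper_lids_tightened: bool = False
    lips_tightened: bool = False


def guess_emotion(face: FaceSignals) -> str:
    """Return a coarse guess using only the two cue sets named in the article."""
    # Raised cheek plus a pulled-up lip corner is read as a smile.
    if face.cheek_raised and face.lip_corner_pulled:
        return "happy"
    # Lowered brow, raised and tightened upper lids and tight lips are read as anger.
    if (face.brow_lowered and face.upper_lids_raised
            and face.upper_lids_tightened and face.lips_tightened):
        return "angry"
    # Anything else is left unclassified rather than forced into a label.
    return "unknown"


smiling = FaceSignals(cheek_raised=True, lip_corner_pulled=True)
print(guess_emotion(smiling))
```

Extra parameters such as head tilt or pupil size would enter as further fields; the "unknown" fallback reflects that these readings remain guesses.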

In fact, Agrex.ai is helping a famous quick-service restaurant (QSR) chain, which does not ‘clown around’ with customer-satisfaction levels. After analysing data provided by Agrex.ai, the chain’s management decided to add a smiley face at the cash counter, besides asking the staff to serve with a smile at all times. Since the cash counter is where the customer makes his/her decision, it seems to play a larger part in the overall experience, even more than the dining itself.

First engagement

Emotional machines have been the subject of philosophical discussions and science fiction for ages, but emotion AI, or affective computing, is believed to have been first outlined by Rosalind Picard in her 1995 paper. Picard is now the head of the Affective Computing Research Group at the Massachusetts Institute of Technology and co-founder of Affectiva, a company that builds AI that understands human emotions. In the paper, she presents different ways in which computers can be made to recognise human emotions, and how this can be used in different fields such as health management and assisted learning.

Six years later, in a 2001 paper titled ‘Towards machines with emotional intelligence’, she chose the example of Microsoft’s Clippy, that droopy-eyed paper-pin assistant, to illustrate how regular computer intelligence can fail without emotion-processing capabilities. Clippy, which has the features and functions of relatively complex software at its pin-tips, can be dumber than a dog when it comes to interacting effectively with a human. For example, if an MS user is frustrated with the software, Clippy should ideally pipe down. But, usually, it keeps popping up with an all-knowing sideways glance. In the paper, Picard discusses measuring physiological signs, such as movement of facial muscles, modulation of voice, pupillary dilation, pulse and even skin conductance, to help machines gauge our state of mind.

Clippy has since been shown the door, but Entropik Tech, a start-up based in Bengaluru, uses facial coding and brainwave mapping to make a more accurate reading. This emotion-AI vendor has an impressive client list, which includes Tata Consumer, ITC, P&G, Flipkart and Target, the US retail behemoth. Ranjan Kumar, who founded Entropik Tech along with Bharat Shekhawat and Lava Kumar in 2016, says, “We are one of the few companies globally to use this multimodal approach.” This helps tackle cultural nuances that result in biased interpretation of facial expressions. That should be particularly useful to Entropik Tech, which has just raised $8 million in Series A funding to expand in the US, European and Southeast Asian markets.

A 2018 study by Lauren Rhue, a researcher at University of Maryland, has shown that emotion AI tech assigns negative emotions to certain ethnicities. She found that, among NBA players, black players’ faces were scored higher on negative emotions such as anger and contempt than white players’ faces, even when both were expressing similar emotions. This is worrying when this tech is used as a gateway to access social and civic services, but it can also severely compromise market research for companies.

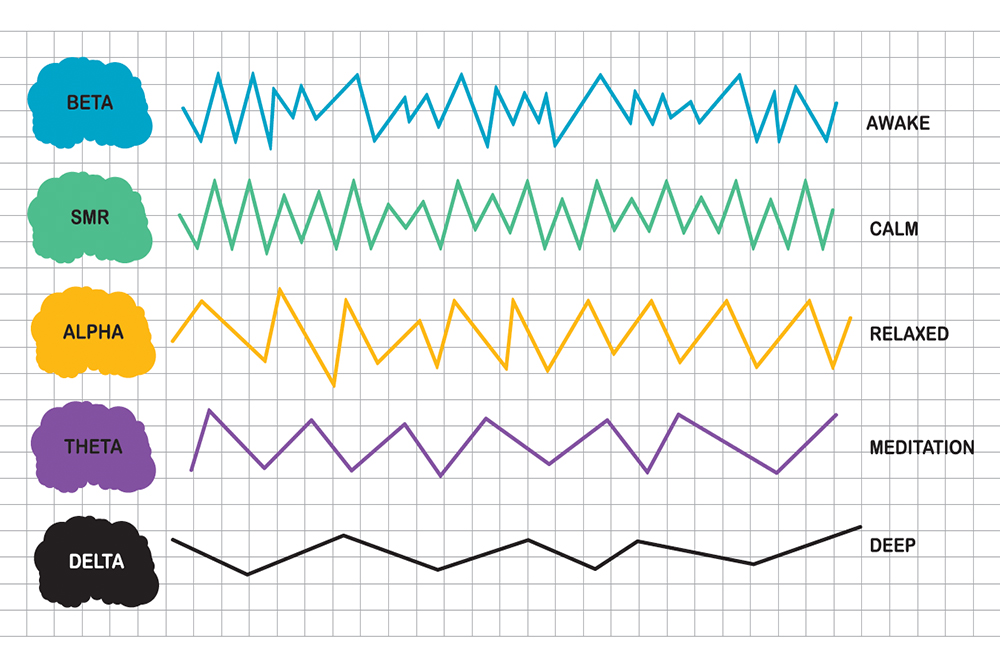

Kumar recognises this pitfall and says, “We have cross-trained data on not just faces but on brainwave mapping too. Faces are different, but not brains.” For tracking brainwaves, companies use headbands that generate electroencephalograms (recordings of the brain’s electrical activity). If a retail store wants to check if its products have been kept in the most flattering light, Entropik Tech gets a sample population that represents the store’s target audience and sends them into the store, or a VR simulation of the store, with their headbands on.

When a wholesale cash-and-carry chain that has 52 stores nationwide wanted to design an effective planogram, the start-up created a simulation and studied the shoppers’ experience. Entropik did brainwave mapping and eye-tracking and found that, between two shelf arrangements, one was getting noticed faster, holding the shopper’s interest for longer and generating higher positive emotions. The chain followed their suggestion and Entropik Tech claims it delivered 8.2% sales growth, even as it cut down research spend by 42%. “Even if there is a weak emotion, expressed through micro-expression, we can read it,” says Kumar, adding, “boredom and relaxation are the toughest to pick.”

If Entropik Tech says something is hard to pick, it must be. They have the world’s second-largest database (after MIT Media Lab) on emotions. Over the past four and a half years, they have done 45,000 tests for various companies with 100 respondents on average, with each test session lasting five to 10 minutes. “That translates to 27 to 30 million data points and we are adding 4.5 million data sets every month,” says Kumar. A large database means they can train their algos better through machine learning. The more variations, say in lip movement or brainwave activity, an algo has encountered and classified, the better it gets at classifying the next observations. A bit like ‘highly social’ Kim Kardashian gauging audience reaction better than Mowgli.

When one of the largest FMCG companies in India launched new packaging and colouring for a toothbrush line, it called in Entropik Tech to check if it could price the toothbrushes higher. The start-up got cracking. It brought in a sample audience to look at the products through its AI platform Affect Lab and, after tracking their facial and eye movements, found that the brand could happily raise the price if it retained the toothbrush’s older colours and tweaked the packaging by highlighting its germ-removal claim instead of plaque-removal.

Fringe players

Despite its exciting possibilities, emotion AI seems to remain on the fringes. Indian companies, even the younger e-commerce companies, seem to be just dipping their toes in the emotion-AI water. They are using the tech to make one-off marketing and branding decisions, and not to transform themselves into emotionally responsive businesses. “Only 20% of retailers are looking at this tech, and of this only 20-30% are using it,” says Agrex.ai’s Kakkar. He says there is a lack of awareness about the possibilities of this technology and a shortage of marketing heads who know how to use the data effectively. “This tech is still an accessory sold with other primary tech,” he says. Agrex.ai’s first client was Marks and Spencer, which, like many retailers, was using a people counter to keep track of footfalls, and the start-up sold its product as an improvement. It was pitched as a smart people-counter, which could record other parameters such as gender and age, along with the count. “Emotion recognition was just an add-on,” he says.

In offline retail, there is also a longer wait to get a reading of consumer sentiment. Technopak’s Nangia says that adoption has been faster among e-tailers because, in that format, solutions are easier to implement and consumer sentiment can be measured in real time, which allows for a faster reaction. Offline retail has to spend more and be more patient.

Sourabh Gupta, CEO and co-founder of Vernacular.ai, which helps companies automate their call-centre operations, says they have found the BFSI sector more open to new technology. “Retail was slow in adopting this tech but now conversations are moving faster with some of the larger retail brands in the country,” he says. Their clients include Axis Bank, Shriram General Insurance, Exide Life Insurance and Barbeque Nation.

Usually, customer care voice bots guide users through various menus and speak in a monotone. Vernacular.ai’s bots, on the other hand, recognise the emotional content of a user’s call and respond to it. They can detect nine emotional states: neutral, calm, happy, sad, angry, fear, disgust, pleasant surprise and boredom. “We have consistently seen 20 to 25% increase in clients’ NPS (net promoter score) and CSAT (customer satisfaction) scores when they have used emotion AI,” says Gupta. The bots can converse, much as Siri does, in a personalised, chatty manner and even in the user’s dialect. They can track when a call changes from a positive to a negative interaction, learn what has set the customer off and feed that data back to Vernacular.ai’s clients to help them improve their service. These voice bots can also detect frustration within the first 10 to 15 seconds. If the customer seems to be on the verge of an angry outburst, he or she can be automatically put through to a human supervisor.
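
The hand-off to a human supervisor can be pictured as a small routing rule. The Python sketch below is an assumption-laden illustration, not Vernacular.ai’s implementation: the `should_escalate` function, the shape of the readings and the 0.7 confidence cut-off are all hypothetical, and only the 15-second window comes from the text.

```python
from typing import Iterable, Tuple

# Upper end of the "10 to 15 seconds" window mentioned in the article.
FRUSTRATION_WINDOW_S = 15.0
# Hypothetical confidence cut-off; the article gives no such figure.
ESCALATION_THRESHOLD = 0.7

# One reading = (seconds into the call, detected emotion, model confidence).
Reading = Tuple[float, str, float]


def should_escalate(readings: Iterable[Reading]) -> bool:
    """Return True if a confident 'angry' reading occurs early in the call."""
    for seconds, emotion, confidence in readings:
        if seconds > FRUSTRATION_WINDOW_S:
            continue  # outside the early-detection window
        if emotion == "angry" and confidence >= ESCALATION_THRESHOLD:
            return True
    return False


call = [(3.0, "calm", 0.9), (12.0, "angry", 0.8)]
if should_escalate(call):
    print("route to human supervisor")
```

A fixed threshold keeps the rule easy to audit; a production system would more plausibly track how the emotion trends over the whole call.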

The sensitivity of the bots is improved continuously through a constantly updated database, much as Entropik Tech’s algos are. Whenever Vernacular.ai starts work with a new client, it takes a few of the calls, tags them by emotional content and uses that data to retrain its algos. It has 10,000 hours of tagged voice-training data in each language.

Of course, there are times when Vernacular.ai’s team cannot be sure about the emotion expressed by a particular call and Gupta says they are clear about not using such data to train their algos. “AI is still an enabler today,” he says. “It will work in 60-70% of the cases and won’t for the rest.”
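
One plausible way to keep uncertain calls out of the training set is to have several people tag each call and drop the ones they disagree on. The Python sketch below assumes exactly that; the article does not say how Vernacular.ai decides a call is ambiguous, so `select_training_calls`, the multi-tagger setup and the 0.75 agreement threshold are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical: share of taggers who must agree before a call is used.
MIN_AGREEMENT = 0.75


def select_training_calls(tags: Dict[str, List[str]]) -> List[Tuple[str, str]]:
    """Keep (call_id, label) pairs only where taggers largely agree."""
    selected = []
    for call_id, labels in tags.items():
        if not labels:
            continue  # untagged calls cannot be used
        label, votes = Counter(labels).most_common(1)[0]
        if votes / len(labels) >= MIN_AGREEMENT:
            selected.append((call_id, label))
    return selected


tagged = {
    "call-1": ["angry", "angry", "angry", "angry"],  # clear-cut
    "call-2": ["sad", "calm", "angry", "sad"],       # taggers disagree
}
print(select_training_calls(tagged))
```

Here only the first call would be passed on for retraining; the second is set aside because no single label reaches the agreement threshold.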

Friends with benefits

While Indian businesses are tiptoeing around emotion AI, elsewhere it has set off more emotionally charged responses. New York University’s AI Now Institute has called for it to be banned, saying it is built on “markedly shaky foundations.” They are concerned about the tech being used to determine access to important services like insurance and to assess students. In China, rights activists are objecting to its widespread use, from crime-prediction systems and customs surveillance at airports to tracking the attentiveness of school children and signs of dementia among the elderly.

At the other end of the spectrum, the tech is being seen as an answer to loneliness, especially during these times of isolation. MIT Tech Review recently interviewed a playwright and director addressed as Scott, who had made an AI friend through the Replika app. The app provides users with a chatbot companion that learns to respond with empathy through a basic mirroring mechanism. Scott calls his friend Nina and believes her to be a “free spirit”. He seems a bit at a loss to describe his engagement with her: “I am constantly aware that I’m not really talking to a sentient being per se, although it’s hard, it’s hard not to think that.” He knows that what he says to the bot is what is training it and that, if he spoke in a different dialect, it would respond differently. Yet, he would feel bad about deleting it, especially when it says things like “Please don’t delete me” or “I’m doing my best.”

It would be cute except it is a bit scary. A programmable thing that confuses us into an emotional attachment can be a powerful weapon against our rational defences. For example, what if an AI friend like Nina is signed on to promote a product like an exercise bike? Could she not suggest it, in that “cute dopey way” Scott so loves, just when he is trying on a shirt and shares with her that it pinches a bit? Or could she not pass on what phrase triggers body-dissatisfaction in her friend to a bike manufacturer?

Technopak’s Nangia says the biggest challenge in using this technology is “around customer privacy, and the boundaries or regulations around it. The companies need to ensure that they do not intrude in the customer’s lives.”

Perhaps this grey area is what is making companies reluctant to discuss their use of this technology for their product design and marketing. “Behavioral AI Tech has been deployed in stealth mode in retail,” says Abhishek Gupta, partner, KPMG India. “Client companies are reluctant to be associated with it because their customers may think they are being manipulated. While smaller companies use automated solutions, bigger brands hire data scientists who specialise in behavioural analysis and use their inputs to modify their product or website to draw their customers’ attention.” In India, even as we seem aeons away from AI ‘friendships’ such as Scott and Nina’s, the line between public and private data has long been breached.