Artificial intelligence is no longer just about breakthroughs in labs or pumping billions of dollars into data centres — it’s in our hospitals, courtrooms, classrooms, and on the battlefield. At Outlook Business, we believe that India needs a sharp, nuanced, and people-first lens on this transformation.

The Inference is our attempt to make sense of a world being rewritten by AI. In this newsletter, we bring you frontline narratives, boardroom insights, and data you can trust. Whether you’re an investor, founder, policymaker, or just curious — this is where the signal cuts through the noise.

In this edition of the newsletter:

Your finances are subject to AI risk

Arjun Reddy’s battle for India’s DeepSeek moment

India’s GCCs are racing ahead on agentic AI

Call from a friend or a fraud?

Humans In The Loop

Your finances are subject to AI risk

Rohan, a marketing professional, had been using ChatGPT for small personal finance queries for months: budgeting tips, stock analysis and quick comparisons. But last week he took a bigger leap. He signed up on an online platform that claimed to offer AI-powered investment advice, promising personalised recommendations with “no human bias”. The platform generated a neat asset allocation plan, complete with buy and sell suggestions.

Asking generative tools for basic guidance is now routine. But handing over financial decisions to platforms that claim AI-driven advisory is an entirely different proposition, and that is where the regulatory fog begins.

“Disclosure makes a user aware that the advice is AI generated but does not fully educate users on potential risks,” says Shilpa Mankar Ahluwalia, Partner at Shardul Amarchand Mangaldas. SEBI’s December 2024 amendment requires registered advisers to disclose AI use and holds them fully responsible for the final advice. But Shilpa notes that disclosure alone does not address deeper issues like design flaws, biases and gaps in data.

The risks sit inside the systems. Many of these tools are trained on data that does not reflect Indian investor behaviour or domestic market patterns. Even when they use local information, their opacity means users have no way of knowing what data went in, how often it is refreshed, or whether the model understands how Indian tax treatment, risk profiles or cash flows work. The answer looks tidy on the screen, but the reasoning behind it stays hidden.

Investor sentiment, meanwhile, has surged ahead. A 2025 CFA Institute survey shows that 91 percent of young Indian graduates still trust human advisers most, yet 83 percent also trust AI assistants for guidance. Amit Tandon, Managing Director at IiAS, says users often forget that the same prompt can produce different answers minutes apart. The suggestions sound objective, yet the logic can shift between prompts.

SEBI’s amendment is a first step. It ensures accountability for advisers who voluntarily adopt AI tools, but it does not yet address unregistered platforms offering automated buy or sell signals. Shilpa believes this gap will need attention soon. India eventually had to build an umbrella data protection law, and in her view the AI advisory segment may require a similar framework.

As investors explore AI tools more aggressively, advisers are watching the trend with curiosity and concern. Santosh Joseph, Managing Director at Germinate Investor Services, believes AI can help with the early stages of the advisory process, such as sorting information or collecting inputs. But the final layer still belongs to humans. “Human AI collaboration is going to be the best for investors and for the industry,” he says. Algorithms can support decision-making, but they cannot replace the trust and conversation that shape a person’s real financial choices.

From The Trenches

Arjun Reddy’s battle for India’s DeepSeek moment

Imagine running a serious coding-grade AI model on a single GPU. No cloud dependency, no queueing for compute, no data slipping out of your organisation. A bank analyst, a hospital IT head or an engineer inside a government department could have a capable model running locally on a workstation or even a high-end laptop.

Arjun Reddy, the Madurai-based founder of AI start-up VibeStudio, says it is possible.

His company has pruned a top AI model called MiniMax M2 by 55 percent while retaining roughly 80 percent of its capability, making it lightweight enough to run on one GPU or even a MacBook Pro. This smaller version has already been downloaded more than 150,000 times.

But his ambition is to precipitate India’s DeepSeek moment by creating a homegrown LLM at a low cost.

“Give me twenty million dollars and eight months, and we can build a sovereign three-billion-parameter mixture-of-experts (MoE) model,” he claims.

It is a bold claim in a year dominated by Chinese open-source models like DeepSeek and Qwen, when the Indian tech ecosystem faced a tough question: why couldn’t we do it?

Reddy’s prescription is blunt. “This is like nuclear or ISRO. You cannot leave it to private capital alone,” he says. Foundational models, in his view, require state-scale support in the same way India built satellites, rockets and the atomic programme.

He argues India does not need to chase parameter counts. It needs lean and efficient models, engineered intelligently and trained end-to-end in the country. Without sovereign models, he warns, India faces job losses at a scale the IT services boom has never prepared it for. Productivity gains from foreign AI will concentrate value outside India, he argues, and could even trigger social unrest.

“We are one year away from the event horizon… We are at war, we just do not realise it,” Reddy says.

Numbers Speak

India’s GCCs are racing ahead on agentic AI

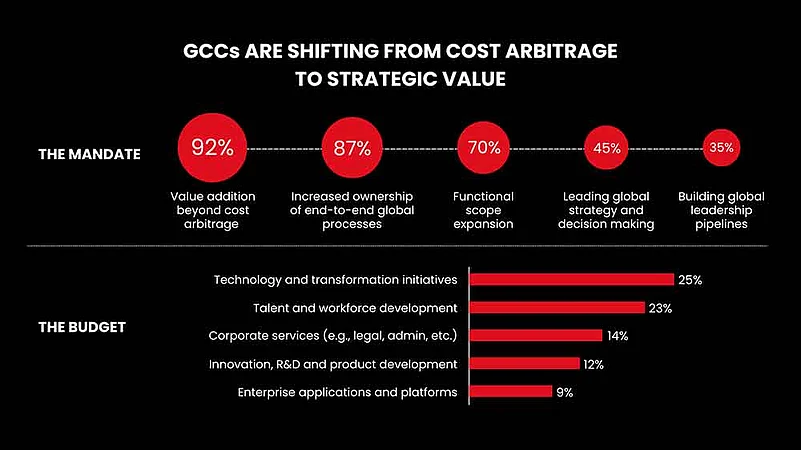

EY’s GCC Pulse Survey 2025 shows that India’s global capability centres have moved far beyond trial runs. Nearly 83 percent of GCCs in India are already investing in GenAI, and a striking 58 percent have begun piloting agentic AI systems. For a technology still early in its enterprise cycle, that number says a lot about how fast these centres are shifting from back-office execution to frontier engineering.

Where is this momentum being directed? The report finds that GCCs are using GenAI to strengthen customer service, finance, and operations, with 65 percent deploying it in support channels, 53 percent in finance workflows, and another 49 percent in operations. IT and cybersecurity follow at 45 percent, signalling an intent to anchor AI deeper within core systems.

“Companies are rethinking how their global operations run. Long-standing issues like fragmented processes are finally being addressed by consolidating entire workflows under one roof, giving GCCs a central role in driving automation,” said Arindam Sen, Partner and GCC Sector Leader, Technology, Media and Entertainment, and Telecommunications, EY India.

This shift is also changing how these centres think about innovation. EY notes that two-thirds of GCCs are now building dedicated innovation teams and internal incubation platforms, aiming to convert in-house ideas into products that can scale globally. In other words, AI is reshaping the ambition of these centres.

Words of Caution

Call from a friend or a fraud?

In Indore, a man recently lost ₹1.8 lakh after scammers used an AI-generated voice to impersonate his brother-in-law living abroad. The call sounded urgent, the tone felt familiar, and the panic felt real. By the time he realised something was off, the money was gone.

Security firms say this is no longer an isolated story. Pindrop reports a 756 percent year-on-year jump in deepfaked or replayed voices on enterprise phone calls. What was once a wily hack has become industrial-grade fraud as synthetic tools get cheaper and more realistic.

Fraud is simply following workflows that have moved online. Recruitment, approvals, ID checks and customer conversations now happen on video calls or remote systems. Verification processes that were designed for a different technological era are struggling to keep up. Pindrop’s analysis shows that nearly one in four North Korean IT applicants for remote jobs use deepfaked audio or video to mask identity.

The shift is quiet but clear. Deepfakes are no longer tomorrow’s threat; they are already part of everyday fraud. Organisations and individuals will need stronger guardrails and modern verification before a voice, or even a face on a screen, becomes the weakest link in digital trust.

Best of our AI coverage

Dear Sam Altman, Indian Start-Ups Have A Wishlist For You

India’s Healthcare AI Start-ups Grapple with a Broken Data Ecosystem

As AI Anxiety Grips Top MBA Campuses, the ‘McKinsey, BCG, Bain’ Dream Flickers

AI Start-Ups Ride a Wave of ‘Curiosity Revenue’, VCs Rethink What It’s Worth

What Exactly Is an ‘AI Start-Up’ — and Does India Have 5,000 of Them?