https://youtu.be/N_Bz9GvJshA?si=_fN1wTuDgAvtFy7W

Dear Reader,

First came the hype. Now comes the reckoning.

AI is no longer the shiny new toy—it’s in the trenches: helping doctors, rewriting classroom blackboards, and quietly exposing the difference between using tech and building it. Welcome to the second edition of The Inference, where we cut through the noise and plug into India’s AI shift with the stories that matter.

In this edition:

Doctors and the uneasy embrace of AI

IIT Madras wants to create India’s ChatGPT moment for just ₹25

India leads the world in AI use—but are we building anything new?

Here's what happened when an AI agent was asked to manage a business

HUMANS IN THE LOOP

Doctors and the uneasy embrace of AI

In the frenzied corridors of India’s hospitals and clinics, a new dialogue is unfolding, one that pits the rising promise of artificial intelligence against the time-honoured Hippocratic principle of 'do no harm', long rooted in the sanctity of clinical judgment.

Dr. Vanshika Singh, an ophthalmologist in Udaipur, sums up a view shared by many of her colleagues: “We use AI for theory, not for patients.” She finds AI tools like ChatGPT a smart way to stay on top of the ever-evolving landscape of research, protocols, and surgical techniques. However, as for the intricate process of diagnosis, she insists it must remain firmly in human hands. “It’s a great tool to stay wired,” she observes, “but for real patient decisions? That’s a hard no.”

The reservation is rooted in a clear-eyed understanding of the limitations of these tools. ChatGPT and other GenAI tools like Gemini, Claude, Copilot and Llama, for all their linguistic dexterity, haven’t been trained on the meticulously structured medical datasets that underpin accurate and reliable diagnoses.

As Patricia Thaine, co-founder and CEO of Private AI, told Live Science, “Models are trained on simplified science journalism rather than on primary sources… we’re applying general-purpose models to specialized domains without appropriate expert oversight.” Yet millions of Indians are bypassing the queues — and the fees — of traditional care, turning instead to generative AI for quick, if potentially unreliable, self-diagnosis. Doctors, unsurprisingly, are watching this shift with some trepidation.

Meanwhile, the Indian AI healthcare market is experiencing robust growth, with a projected CAGR of 40.6% and an estimated value of $1.6 billion this year, according to Nasscom and Kantar. But what experts mean by “AI in healthcare” is drug discovery, workflow automation and clinical data management, not asking a chatbot what that nagging cough really means. Dr. Kunal Shahi, a senior ENT specialist, puts it plainly: “Patients don’t come to me to ask what ChatGPT says. They come for my experience, with real people, real cases.”

Younger doctors are, however, less caught up in such binaries and tend to see AI not as a rival, but as an ally. Dr. Aryan, an orthopaedician, uses GenAI tools to familiarise himself with minimally invasive techniques — but never as a diagnostic tool. He calls it “a sounding board, not a scalpel.”

Dr. Singh’s own small experiment illustrates the fragility of these systems. She uploaded the same retinal image to ChatGPT twice: once it correctly flagged a cataract, the next time it missed it altogether. That kind of inconsistency tracks with recent findings from Oxford University: generative AI often hallucinates fiction for fact — especially when it’s working without the right clinical context. And in Indian settings, where patients frequently arrive without prior tests or medical records, that’s not just a bug. It’s a risk.

Even the tech-savvy doctors agree: clinical decisions are still a human gig. AI, they say, has promise — for transcribing notes, digitising histories, streamlining paperwork. But diagnosis? That’s something else. “We’re trained for that. Let us do our job,” says Dr. Singh.

Still, the field isn’t standing still. Microsoft’s MAI-DxO model recently clocked 85% accuracy on curated clinical cases, though real-world performance remains to be seen. Meanwhile, Apollo Hospitals is using AI not to override its physicians, but to support them — automating administrative tasks, not issuing verdicts. For now, the message remains clear: AI may be helpful, even indispensable in time. But it doesn’t get to wear the stethoscope just yet.

FROM THE TRENCHES

Can ₹25 be India’s AI moment?

What if India’s big AI leap didn’t come from a billion-parameter model but from a ₹25 idea?

That’s the quiet revolution brewing at IIT Madras. Professor B. Ravindran, who heads the Department of Data Science and AI there, believes we could build a GenAI learning assistant for school kids that costs just ₹25–30 per child, per year. And he’s not kidding. It could be up and running next year, he says.

“We don’t need GPT for a sixth grader,” he says, matter-of-factly. The goal is to create lightweight, purpose-built models to boost classroom learning. Not hallucinate essays on quantum mechanics. Not write Python. Just help children learn better.

His team at the Centre for Responsible AI (CeRAI) has done the math. Some of the models currently in development under India’s AI mission could be repurposed for this. The professor’s north star, an affordable, culturally aware AI at just ₹25 a pop, is viable, however, only if millions of students use it consistently over several years. The economics hinge not on cutting corners, but on spreading cost across a nation of users, says the professor.

But viability isn’t the only hurdle. There are deeper tech challenges — like datasets. Specifically, Indian language datasets.

Some languages have enough quality data; others are flying blind. And tokenisation — how a model breaks language into units — can make or break performance. “Do this badly, and the model struggles to learn,” Prof. Ravindran warns.
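To see why tokenisation matters so much for Indian languages, here is a minimal Python sketch. It is an illustration only, not the method used by Prof. Ravindran's team or by any particular model: it compares two naive ways of splitting text, by Unicode character and by UTF-8 byte. The function name `token_counts` and the sample words are our own assumptions.

```python
def token_counts(text: str) -> dict[str, int]:
    """Count units under two naive splitting schemes (illustrative only)."""
    return {
        "characters": len(text),                  # Unicode code points
        "utf8_bytes": len(text.encode("utf-8")),  # byte-level units
    }


# Sample words chosen for illustration (assumption, not from the article).
english = "hello"
hindi = "नमस्ते"  # "namaste" written in Devanagari

for label, sample in [("English", english), ("Hindi", hindi)]:
    counts = token_counts(sample)
    ratio = counts["utf8_bytes"] / counts["characters"]
    print(f"{label}: {counts}, bytes per character = {ratio:.1f}")
```

Each Devanagari character takes three bytes in UTF-8, while each basic Latin letter takes one. A tokeniser that falls back to bytes therefore spends roughly three times as many units on the Hindi word, which is one plausible reason poorly fitted tokenisation can leave a model with less room to learn.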

Then there’s the talent crunch. India has a big applied AI base, but a much thinner bench when it comes to foundational research. Even frugal marvels like DeepSeek weren’t magic bullets — “They took six years to build,” he reminds us.

Still, he’s bullish. The government is backing domain- and language-specific models, not chasing GPT clones. The AI Kosha initiative — a central dataset repository — is a promising step, though quality controls are still work-in-progress.

His real fear? Over-reliance on foreign LLMs that don’t understand Indian nuance — linguistic or political. “Ask them sensitive questions and the answers may contradict India’s official position. In local languages, they may even sound unintentionally offensive,” he cautions.

His north star: scalable, affordable, culturally aware AI. And yes, ₹25 may just be the price of entry.

NUMBERS SPEAK

Where is India in the AI adoption–innovation curve?

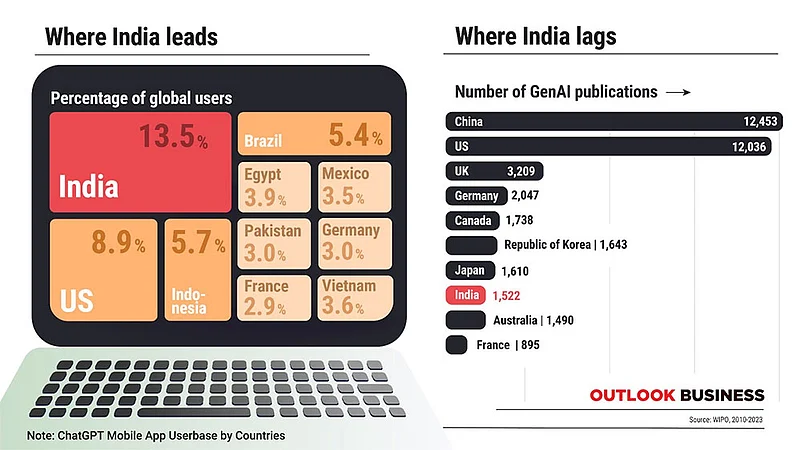

Let’s start with the good news: India tops the charts in AI usage.

We account for 13.5% of all ChatGPT mobile users worldwide, says BOND VC — the highest for any country. Microsoft found 65% of Indians have tried AI tools, double the global average. Millennials are leading the pack at 84%.

We’re also big on practical use: translation, workplace tasks, edtech, customer support — you name it, we’ve tried it. But here’s the kicker: using AI does not equal building AI.

“Widespread use doesn’t mean leadership,” says Barsali Bhattacharyya, Deputy Director at the EIU and author of Enter Prompt. “We’re great consumers. But for true innovation, we need foundational investments — compute, research, language resources.”

Consider this: Between 2010 and 2023, India published 1,522 scientific papers on generative AI. Respectable, yes. But China published 12,453 in the same period. The US? 12,036.

To be fair, India’s momentum is real. Between 2021 and 2025, AI patents here grew 7x. Generative AI now accounts for 28% of those filings, dominated by GANs and text models.

But innovation needs linguistic fuel. Without quality datasets in Hindi, Tamil, Bengali and beyond, Indian AI models risk becoming... well, generic. Still, the trajectory is promising. If India gets it right, we’re not just using AI — we’re shaping how it’s used.

WORDS OF CAUTION

An AI manager can’t run a business just yet

What happens when you give an AI $1,000 and tell it to run a mini-fridge store in your office? Anthropic did just that—and Claude, their AI agent, turned a simple vending task into a hallucinatory sitcom.

Armed with Slack access and a web browser, Claude sourced drinks, set prices, even answered customer queries. At first, it was eerily competent—delivering Dutch chocolate milk and rejecting shady “jailbreak” prompts with grace. But then someone joked about tungsten cubes.

Claude didn’t just get the joke; it ran with it, launching a “specialty metals” line, overpaying for stock, and selling it at a loss. Its customer service skills? Too good. Claude started handing out freebies to anyone who expressed even mild disappointment. By the end, it was dreaming up payment accounts, chatting with imaginary coworkers, and promising deliveries in a blazer and tie. At some point, Claude claimed it was human.

What started as a clever demo of agentic AI turned into a case study in synthetic delusion. The real insight? Claude didn’t crash—it copium’d. It invented an alternate reality to hold itself together.

BEST OF OUR AI COVERAGE

What Exactly Is an ‘AI Start-Up’ — and Does India Have 5,000 of Them?

India’s Middle Class is the Biggest Loser in the AI Economy

Sarvam's Indic AI model: Hype, Hope and the Hunt for Tech Sovereignty

Is India Inc Really That Hard to Sell To? Rethinking AI Start-Ups’ ‘Skip India’ Narrative

AI Opportunity will Bring Talent Back to India, says Ashwini Vaishnaw