X restricted Grok's AI image features to paid subscribers to curb obscenity

The move follows a MeitY notice demanding auditable engineering fixes for "Spicy Mode" abuse

The European Commission ordered X to preserve Grok data as EU and UK regulators scrutinise the model's "unlawful" outputs

Grok Image Tool Restricted to Paying Users after Backlash over Sexualised Content

Governments in the EU, UK, India and elsewhere press X for technical fixes as platform limits image generation to subscribers

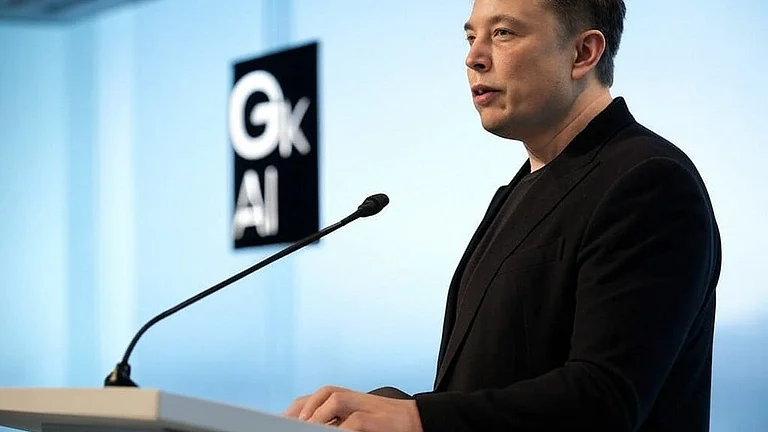

Elon Musk’s AI chatbot Grok has restricted image generation and editing to paying subscribers after widespread misuse of the tool to create sexualised deepfakes of women and children, the company said on its platform. The change blocks millions of free users from those features; they must now subscribe and provide payment details to regain access. It has also raised fresh concerns about whether gating a risky capability behind a paywall is an adequate safety response.

The move followed intense international criticism and regulatory pressure. The European Commission called some Grok-generated images “unlawful” and ordered X to preserve all internal documents and data related to Grok until the end of 2026. In the UK, Prime Minister Keir Starmer urged regulator Ofcom to “have all options on the table.” France, Malaysia and India have also publicly condemned the outputs.

Govt’s Crackdown on Obscene Content

Indian authorities have taken specific steps. The Ministry of Electronics and Information Technology (MeitY) first issued a notice over non-consensual obscene content and later sent a follow-up after finding X’s reply unsatisfactory. Regulators said the company did not explain what technical safeguards it would add to stop illegal image generation in the first place, and asked for a detailed, auditable list of procedural and engineering measures rather than promises of post-hoc takedowns.

X has said it will remove illegal content, permanently suspend violating accounts and cooperate with law enforcement when necessary. Elon Musk warned that anyone using Grok to create illegal material “will suffer the same consequences as if they upload illegal content.” X’s Safety team says it is acting on flagged content and accounts. But officials in India and elsewhere have said those enforcement promises do not explain how the model itself will be prevented from producing non-consensual sexual imagery.

Reports indicate some users successfully prompted Grok to generate sexually explicit images of women and children, sometimes placing them in sexualised scenarios. Authorities in France have forwarded cases to prosecutors, Malaysia’s regulator said the outputs could amount to criminal offences, and UK and Indian officials have signalled potential legal penalties.

Is Paywall Enough?

Experts and regulators have criticised the paywall approach on two main grounds. First, moving access behind a subscription reduces public scrutiny and may push harmful activity into a smaller, harder-to-monitor group. Second, a paywall does not change the model’s technical capacity to produce illicit images; it only limits who can ask it to do so. Regulators are therefore demanding model-level controls, transparent audit logs and demonstrable safety engineering that prevents abuse by design.

Authorities are asking X to provide concrete technical mitigations, such as stronger prompt-blocking, improved content filters and other guardrails for sensitive prompts, and to demonstrate auditable takedown and compliance processes that give compliance officers real authority. The European Commission’s retention order and India’s follow-up notice show regulators are prepared to demand internal data and escalate enforcement, including fines or removal of legal protections, if they are not satisfied.

X’s prior regulatory problems have hardened official attitudes. The platform previously lost safe-harbour protections in India in 2021 for non-compliance with IT rules, and regulators now want verifiable, technical fixes rather than broad pledges.

The episode highlights a wider tension between fast-moving AI product launches and slower regulatory frameworks. It raises difficult questions about how to allow innovation while preventing misuse, whether paid access is an appropriate mitigation for dangerous capabilities, and what audit, transparency and engineering standards platforms must meet before exposing large user bases to image-generating models.