US chip giant Nvidia introduced a new wave of technology at the latest Computex electronics convention in Taiwan, aiming to sustain surging demand for AI computing and keep its products at the forefront of the industry, Bloomberg reported.

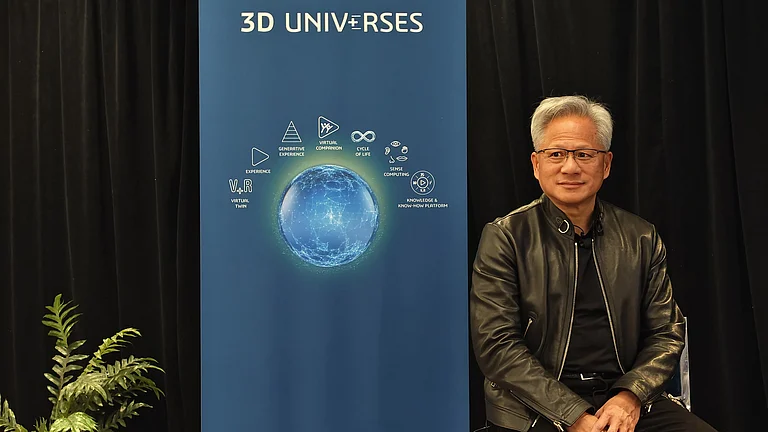

Chief executive officer Jensen Huang opened Asia’s largest technology event on Monday, showcasing new products and strengthening ties with a region critical to the technology supply chain. The company’s stock has been surging after a deal-making trip to the Middle East as part of a trade mission led by US President Donald Trump.

Huang returned to Taiwan, where he introduced changes to the ecosystem surrounding Nvidia’s accelerator processors, which are essential for developing and running AI applications and services. The primary goal is to broaden the reach of Nvidia’s products and eliminate barriers to AI adoption across more industries and countries.

“When new markets have to be created, they have to be created starting here, at the centre of the computer ecosystem,” Huang said about Taiwan.

He also updated the timeline for Nvidia’s next-generation GB300 systems for artificial-intelligence workloads, saying they will be available in the third quarter of 2025. He said these systems will improve on the current top-tier Grace Blackwell AI systems now being installed by major cloud service providers.

NVLink Fusion

Nvidia unveiled NVLink Fusion, an advanced version of its NVLink technology, at Computex in Taipei. The new silicon technology lets chipmakers build powerful semi-custom AI systems by linking multiple chips over high-speed chip-to-chip connections. Marvell Technology and MediaTek are among the first partners to adopt NVLink Fusion for their custom AI-chip designs, aiming to meet the demands of data-intensive AI workloads such as model training and inference.

Nvidia developed NVLink years ago to enable rapid data exchange between chips, as in systems like the GB200, which pairs two Blackwell GPUs with a Grace CPU. NVLink Fusion extends this capability to third-party chip designers, allowing them to connect their own custom CPUs or accelerators to Nvidia’s ecosystem and improving the scalability and performance of AI infrastructure.

GB300 Systems

Huang announced that Nvidia’s next-generation GB300 AI systems will start shipping in the third quarter of 2025. They will succeed the current Grace Blackwell systems, Nvidia’s flagship AI hardware used by cloud providers such as Amazon and Microsoft.

The GB300 continues Nvidia’s architectural strategy of tightly integrating CPUs and GPUs to speed up AI-model training and inference.